
Graph-based Replay Algorithm and Other Latency/Throughput Changes #27720


| } | ||

| } | ||

|

|

||

| impl Replayer { |


@apfitzge i think we can probably use this for banking stage since it's pretty generic


Yeah looks like a lot of this can be reused for banking. Still working in incremental steps to get banking stage into a state where we can do it 😄

| let mut timings = ExecuteTimings::default(); | ||

|

|

||

| let txs = vec![tx_and_idx.0]; | ||

| let mut batch = |


expecting some comments on this :)


ha, this does seem odd. I'm guessing that you've left it as a batch here for a few reasons:

- that's how it's executed/committed

- this will be easier to re-use in the generic scheduler


yeah.. right now i'm assuming the scheduler algorithm is perfect and doesn't have any lock failures. it seems like that's okay, but it's a pretty big change from what the system does now

| /// A handle to the replayer. Each replayer handle has a separate channel that responses are sent on. | ||

| /// This means multiple threads can have a handle to the replayer and results are sent back to the | ||

| /// correct replayer handle each time. | ||

| impl ReplayerHandle { |


thoughts on naming wrt this and struct below?


I think they are fine. Only real critique would be that the file is named executor.rs, so it's a bit odd these aren't named similarly.


Had this in my mind for the past few days, not a strong opinion, but ReplayExecutor or something along those lines is preferable to me
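For readers following the naming discussion, here is a minimal sketch of the pattern the doc comment on `ReplayerHandle` describes: one shared worker, with each handle owning its own response channel so results go back to the handle that sent the request. Everything here (`Worker`, `Handle`, `Request`, the doubling "work") is hypothetical and uses only `std::sync::mpsc`; it is not the PR's implementation.

```rust
use std::sync::mpsc::{channel, Receiver, Sender};
use std::thread::{self, JoinHandle};

/// A unit of work plus the channel its result should be sent back on.
struct Request {
    value: u64,
    respond_to: Sender<u64>,
}

/// Hypothetical stand-in for the replayer: owns the worker thread.
struct Worker {
    request_tx: Sender<Request>,
    thread: JoinHandle<()>,
}

/// Hypothetical stand-in for a replayer handle: shares the request channel,
/// but has a response channel of its own.
struct Handle {
    request_tx: Sender<Request>,
    response_tx: Sender<u64>,
    response_rx: Receiver<u64>,
}

impl Worker {
    fn new() -> Self {
        let (request_tx, request_rx) = channel::<Request>();
        let thread = thread::spawn(move || {
            // The loop ends once every request sender has been dropped.
            for req in request_rx {
                // Placeholder "execution": double the value.
                let _ = req.respond_to.send(req.value * 2);
            }
        });
        Worker { request_tx, thread }
    }

    fn handle(&self) -> Handle {
        let (response_tx, response_rx) = channel();
        Handle {
            request_tx: self.request_tx.clone(),
            response_tx,
            response_rx,
        }
    }

    /// Drops the worker's own sender and waits for the thread to exit.
    /// All handles must be dropped first, or the thread never sees EOF.
    fn join(self) -> thread::Result<()> {
        drop(self.request_tx);
        self.thread.join()
    }
}

impl Handle {
    fn send(&self, value: u64) {
        let req = Request { value, respond_to: self.response_tx.clone() };
        self.request_tx.send(req).expect("worker thread is alive");
    }

    fn recv(&self) -> u64 {
        self.response_rx.recv().expect("worker thread is alive")
    }
}

fn main() {
    let worker = Worker::new();
    let a = worker.handle();
    let b = worker.handle();
    a.send(1);
    b.send(10);
    println!("a got {}, b got {}", a.recv(), b.recv());
    drop(a);
    drop(b);
    worker.join().expect("worker thread panicked");
}
```

In this sketch the handles have to be dropped before `join` is called, since the worker only exits once every request sender is gone.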

| transactions_indices_to_schedule.extend( | ||

| transactions | ||

| .iter() | ||

| .cloned() |


we did an into_iter() previously, not sure if that would be better than cloning here.
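A small illustration of the trade-off being asked about, assuming the caller can give up ownership of the vector. The function names and the use of `String` as a stand-in transaction type are hypothetical; whether the move is possible in the PR depends on who else needs `transactions` afterwards.

```rust
/// Pairs each transaction with its index by cloning every element.
/// The caller keeps the original slice.
fn index_by_clone(transactions: &[String]) -> Vec<(String, usize)> {
    transactions
        .iter()
        .cloned()
        .enumerate()
        .map(|(idx, tx)| (tx, idx))
        .collect()
}

/// Pairs each transaction with its index by consuming the vector.
/// No per-element clone, but the caller loses the vector.
fn index_by_move(transactions: Vec<String>) -> Vec<(String, usize)> {
    transactions
        .into_iter()
        .enumerate()
        .map(|(idx, tx)| (tx, idx))
        .collect()
}

fn main() {
    let txs = vec!["tx1".to_string(), "tx2".to_string()];
    let cloned = index_by_clone(&txs);
    let moved = index_by_move(txs);
    println!("{}", cloned == moved);
}
```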

| .for_each( | ||

| |ReplayResponse { | ||

| result, | ||

| timing: _, |


can we remove this if we aren't going to use it?


yeah lemme figure out where it was used and add back in!

| timing: _, | ||

| batch_idx, | ||

| }| { | ||

| let batch_idx = batch_idx.unwrap(); |


If we always unwrap these from the result, can we remove the Option?


the idea here was that if we decide to use it for banking stage, we might not have a batch index. but i'll go ahead and make it a usize and we can circle back to banking stage stuff later

| if let Some((_, err)) = transaction_results | ||

| .iter() | ||

| .enumerate() | ||

| .find(|(_, r)| r.is_err()) |


This iteration seems unnecessary. Could we just have a mut bool seen_error = false; and set it to true at line 185 if there's an error?

If I'm missing something, and we can't then we should at least remove the enumerate() that isn't being used.

| .enumerate() | ||

| .find(|(_, r)| r.is_err()) | ||

| { | ||

| while processing_state |


In contrast to the above comment, I think this is probably fine since we'll only do this iteration if we hit an error.

|

|

||

| let pre_process_units: u64 = aggregate_total_execution_units(timings); | ||

|

|

||

| let (tx_results, balances) = batch.bank().load_execute_and_commit_transactions( |


nit: seems odd to me that we use the passed Arc<Bank> everywhere else, but then the bank on the batch for this.

There are no situations where these should be different, so better to be consistent imo.


ah yeah, lemme use bank here


| balances, | ||

| token_balances, | ||

| rent_debits, | ||

| transaction_indexes.to_vec(), |


isn't this already a vec?

| // build a map whose key is a pubkey + value is a sorted vector of all indices that | ||

| // lock that account | ||

| let mut indices_read_locking_account = HashMap::new(); | ||

| let mut indicies_write_locking_account = HashMap::new(); |


| let mut indicies_write_locking_account = HashMap::new(); | |

| let mut indices_write_locking_account = HashMap::new(); |

will also have to change the below references to the variable.

| .map(|(idx, account_locks)| { | ||

| // user measured value from mainnet; rarely see more than 30 conflicts or so | ||

| let mut dep_graph = HashSet::with_capacity(DEFAULT_CONFLICT_SET_SIZE); | ||

| let readlock_accs = account_locks.writable.iter(); |


I get what's going on, but I think the naming of these readlock_accs and writelock_accs can be confusing at a glance. Still trying to come up with a better name though...

| if let Some(err) = tx_account_locks_results.iter().find(|r| r.is_err()) { | ||

| err.clone()?; | ||

| } | ||

| let transaction_locks: Vec<_> = tx_account_locks_results | ||

| .iter() | ||

| .map(|r| r.as_ref().unwrap()) | ||

| .collect(); |


You can avoid the double iteration & the unwrap() by collecting Vec<Result<T, E>> into Result<Vec<T>, E>

| if let Some(err) = tx_account_locks_results.iter().find(|r| r.is_err()) { | |

| err.clone()?; | |

| } | |

| let transaction_locks: Vec<_> = tx_account_locks_results | |

| .iter() | |

| .map(|r| r.as_ref().unwrap()) | |

| .collect(); | |

| let transaction_locks = tx_account_locks_results | |

| .iter() | |

| .map(|r| r.as_ref()) | |

| .collect::<std::result::Result<Vec<&TransactionAccountLocks>, _>>() | |

| .map_err(|e| e.clone())?; |
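The suggestion above relies on the standard library's `FromIterator` impl for `Result`, which stops at the first `Err`. A self-contained sketch with stand-in types follows; `LockError`, `AccountLocks`, and `collect_locks` are hypothetical placeholders for the real error type, `TransactionAccountLocks`, and the surrounding function.

```rust
#[derive(Debug, Clone, PartialEq)]
struct LockError(String);

#[derive(Debug, PartialEq)]
struct AccountLocks {
    writable: Vec<char>,
    readonly: Vec<char>,
}

/// Turns a slice of per-transaction results into either a vector of
/// borrowed locks or the first error encountered, in a single pass.
fn collect_locks(
    results: &[Result<AccountLocks, LockError>],
) -> Result<Vec<&AccountLocks>, LockError> {
    results
        .iter()
        // as_ref() borrows both sides; only the error is cloned, and only
        // on the failure path.
        .map(|r| r.as_ref().map_err(|e| e.clone()))
        .collect()
}

fn main() {
    let results = vec![
        Ok(AccountLocks { writable: vec!['A'], readonly: vec!['B'] }),
        Err(LockError("too many account locks".to_string())),
    ];
    match collect_locks(&results) {
        Ok(locks) => println!("collected {} lock sets", locks.len()),
        Err(err) => println!("failed: {:?}", err),
    }
}
```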


Perhaps taking it a step further and skipping these 2 full iterations entirely by changing the format of the next one as:

    for (idx, tx_account_locks) in transaction_locks.iter().enumerate() {
        let tx_account_locks = tx_account_locks.as_ref().map_err(|e| e.clone())?;
        for account in &tx_account_locks.readonly {
            // ...
        }
        for account in &tx_account_locks.writable {
            // ...
        }
    }

| // more entries may have been received while replaying this slot. | ||

| // looping over this ensures that slots will be processed as fast as possible with the | ||

| // lowest latency. | ||

| while did_process_entries { |


Doesn't this give preference to the current bank over other banks?


seems ideal? you need to finish the bank before you can vote and work on the next one

|

@buffalu doesn't this node look even better? See |

that one also looks good, but hard to know what kinda hardware they're running. my setup is running on the same hardware in same data center |

7502P accounts on nvme. i think the accounts store is higher bc im not batch writing like yours might be during replay. its only writing one tx worth of accounts at a time compared to multiple (potentially in parallel). https://www.cpubenchmark.net/compare/AMD-EPYC-7443P-vs-AMD-EPYC-7502P/4391vs3538 are you running stock 1.10? |

|

im not running it from 1.10 on any servers right now, but lemme spin one up and compare for slots at the same time; will send graph over later today. |

running 1.10.38 plus a very small, low-risk patch. I plan to issue a PR in a day or two. If you share a WS endpoint I can run some compare utils |


some higher-level comments than the previous round.

| @@ -367,6 +380,7 @@ fn bench_process_entries(randomize_txs: bool, bencher: &mut Bencher) { | |||

| &keypairs, | |||

| initial_lamports, | |||

| num_accounts, | |||

| &replayer_handle, | |||

| ); | |||

| }); | |||

| } | |||


think I made a similar comment on the previous PR, but it'd be good if we could join the replayer threads here.


sg! will def do before cleaning up, would like to get high-level comments out of the way before going in and fixing/adding tests, just to make sure i'm on the right path

| ) -> Vec<ReplaySlotFromBlockstore> { | ||

| // Make mutable shared structures thread safe. | ||

| let progress = RwLock::new(progress); | ||

| let longest_replay_time_us = AtomicU64::new(0); | ||

|

|

||

| let bank_slots_replayers: Vec<_> = active_bank_slots |


naming nit: makes it seem like there are multiple replayers, but these are actually just different handles


| .collect() | ||

| } | ||

|

|

||

| pub fn join(self) -> thread::Result<()> { |


This is unused but should be in several places.

| // get_max_thread_count to match number of threads in the old code. | ||

| // see: https://github.com/solana-labs/solana/pull/24853 | ||

| lazy_static! { | ||

| static ref PAR_THREAD_POOL: ThreadPool = rayon::ThreadPoolBuilder::new() |


Trying to get a clear summary of the different threads involved in the old replay and the new one.

Old:

- PAR_THREAD_POOL - this has num_cpus::get() threads. Used for rebatching and executing transactions.

- main threads that use the thread_pool to execute transactions.

New:

- PAR_THREAD_POOL - this has num_cpus::get() threads. It is used for building the dependency graphs in slots.

- Replayer.threads - depends on passed parameter, but seems to be get_thread_count() consistently, which is num_cpus::get() / 2. These do the actual work of executing transactions during replay.

- main threads that call into generate_dependency_graph.

Let me know if the above summary is incorrect.


yes that seems correct

| let txs = vec![tx_and_idx.0]; | ||

| let mut batch = | ||

| TransactionBatch::new(vec![Ok(())], &bank, Cow::Borrowed(&txs)); | ||

| batch.set_needs_unlock(false); |


Couple of questions on this as I'm reading through again:

- We have a bank, why not grab the account locks for this account? Performance-wise, not grabbing them is definitely more efficient, but it'd probably be better to do this replay separation first and then remove locking in a separate change, once we're convinced we don't need the locks anymore.

- I'm not entirely convinced this can actually get rid of the locks. If we are replaying active banks concurrently, is there something I'm not aware of that prevents those from touching the same account(s)?


yes, we can do that. if it's locked, perhaps we should continually spin until it's unlocked, perhaps with a timeout or assert?


alternatively, could grab the lock in caller?


I feel it's more intuitive to have the executor do the locking. Yeah we could either just spin until it's unlocked, or even add it to some queue of blocked work that is rechecked if unblocked every loop.

I imagine spinning is probably fine though, since it sounds like you've not hit this yet and is probably a rare edge case.
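A rough sketch of the "spin until unlocked, with a timeout" option discussed in this thread. `AccountLockTable` and its methods are hypothetical and stand in for whatever the bank's account locking would expose; accounts are modeled as `char` for brevity, and only write locks are considered.

```rust
use std::collections::HashSet;
use std::sync::Mutex;
use std::thread;
use std::time::{Duration, Instant};

/// Hypothetical table of currently write-locked accounts.
struct AccountLockTable {
    locked: Mutex<HashSet<char>>,
}

impl AccountLockTable {
    fn new() -> Self {
        AccountLockTable { locked: Mutex::new(HashSet::new()) }
    }

    /// Takes all requested locks atomically, or none of them.
    fn try_lock(&self, accounts: &[char]) -> bool {
        let mut locked = self.locked.lock().unwrap();
        if accounts.iter().any(|a| locked.contains(a)) {
            return false;
        }
        locked.extend(accounts.iter().copied());
        true
    }

    fn unlock(&self, accounts: &[char]) {
        let mut locked = self.locked.lock().unwrap();
        for account in accounts {
            locked.remove(account);
        }
    }

    /// Spins on try_lock until it succeeds or the timeout elapses.
    /// Returns false on timeout so the caller can assert or report.
    fn lock_with_timeout(&self, accounts: &[char], timeout: Duration) -> bool {
        let deadline = Instant::now() + timeout;
        loop {
            if self.try_lock(accounts) {
                return true;
            }
            if Instant::now() >= deadline {
                return false;
            }
            thread::yield_now();
        }
    }
}

fn main() {
    let table = AccountLockTable::new();
    assert!(table.try_lock(&['A', 'B']));
    // 'B' is still held, so this attempt times out.
    let got_lock = table.lock_with_timeout(&['B', 'C'], Duration::from_millis(5));
    println!("locked while contended: {}", got_lock);
    table.unlock(&['A', 'B']);
    println!("locked after unlock: {}", table.try_lock(&['B', 'C']));
}
```

The queue-of-blocked-work alternative mentioned above would replace the spin loop with a list that is rechecked on each pass of the executor loop; that variant is not shown here.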

| cost_capacity_meter: Arc<RwLock<BlockCostCapacityMeter>>, | ||

| tx_cost: u64, | ||

| tx_costs: &[u64], |


I know this isn't your name, but it would be nice if we changed this to tx_costs_without_bpf to make it clear this does not include bpf costs.

| // build a resource-based dependency graph | ||

| let tx_account_locks_results: Vec<Result<_>> = transactions_indices_to_schedule | ||

| .iter() | ||

| .map(|(tx, _)| tx.get_account_locks(MAX_TX_ACCOUNT_LOCKS)) |


should we use bank.get_transaction_account_lock_limit() here?


yes

major time differences are probably related to cpu type, although #27786 seems like it's on the right track |

|

this is running on a similar PR to this on 1.10

|

| replayer_handle, | ||

| )?; | ||

|

|

||

| Ok(true) |


seems like this'd be better than returning true here:

    let slot_full = slot_entries_load_result.2;
    confirm_slot_entries(...)?;
    Ok(!slot_full)

that is, don't bother calling confirm_slot() again if the slot is full since there's nothing more to process by definition. Ok(!bank.is_complete()) could be another way to express this.
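To make the effect of the suggested return value concrete, here is a toy model of the `while did_process_entries` loop. `MockSlot`, its `arrivals` queue, and the simplified `confirm_slot` signature are all hypothetical; the only thing carried over from the discussion is returning `Ok(!slot_full)` instead of `Ok(true)`.

```rust
use std::collections::VecDeque;

/// Hypothetical slot: each arrival is (number of entries, slot_full flag).
struct MockSlot {
    arrivals: VecDeque<(usize, bool)>,
    processed: usize,
}

/// Processes whatever entries are available. Returns Ok(true) only if it
/// is worth calling again, i.e. entries were processed and the slot is
/// not yet full.
fn confirm_slot(slot: &mut MockSlot) -> Result<bool, String> {
    let (num_entries, slot_full) = match slot.arrivals.pop_front() {
        Some(arrival) => arrival,
        None => return Ok(false), // nothing new was loaded
    };
    slot.processed += num_entries;
    Ok(!slot_full)
}

/// Keeps confirming while there may be more entries; returns the number
/// of confirm_slot calls made.
fn replay(slot: &mut MockSlot) -> Result<usize, String> {
    let mut calls = 0;
    let mut did_process_entries = true;
    while did_process_entries {
        did_process_entries = confirm_slot(slot)?;
        calls += 1;
    }
    Ok(calls)
}

fn main() {
    let mut slot = MockSlot {
        arrivals: VecDeque::from(vec![(3, false), (2, true)]),
        processed: 0,
    };
    let calls = replay(&mut slot).unwrap();
    println!("calls = {}, processed = {}", calls, slot.processed);
}
```

In this model, returning `Ok(true)` unconditionally would cost one extra call that finds nothing to load once the slot is full.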

Original discussion: #27632

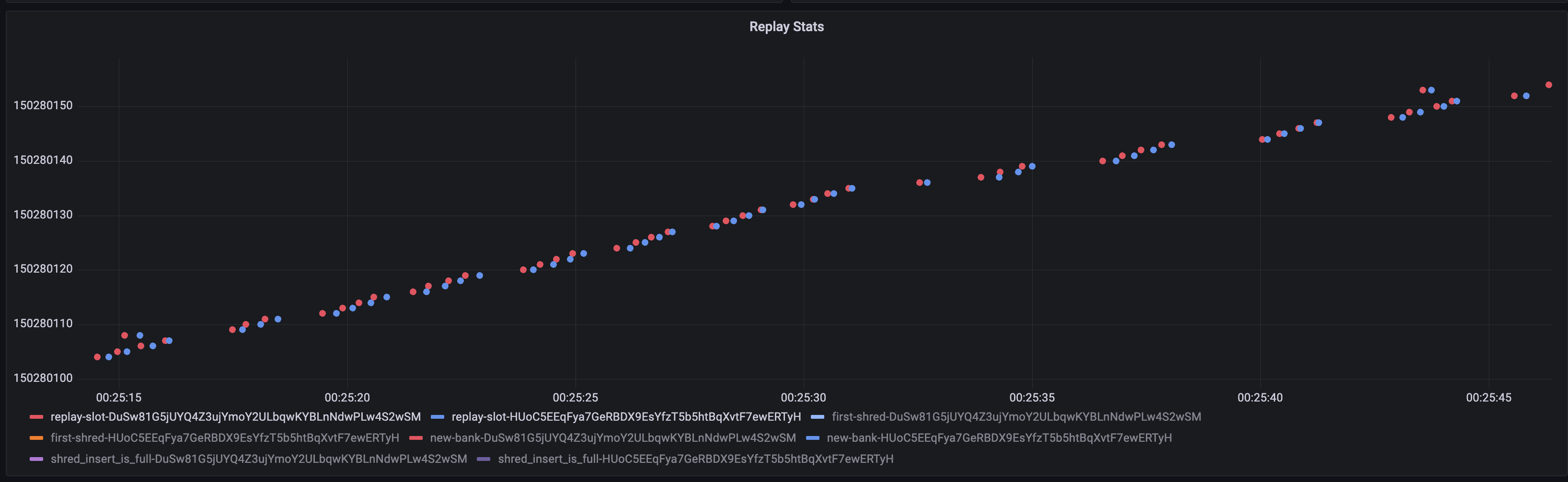

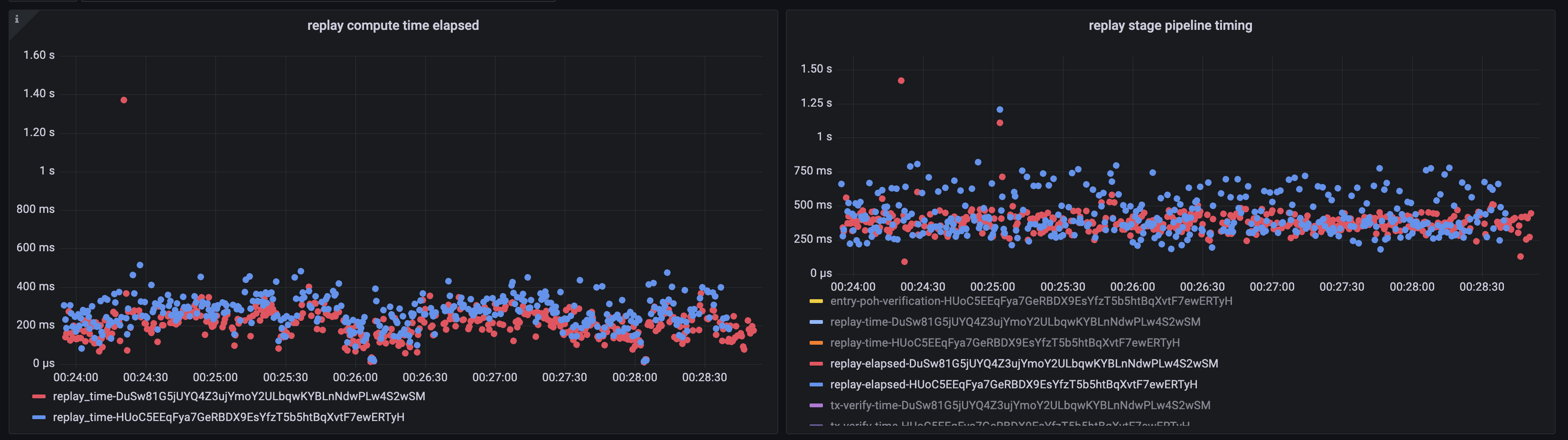

Metrics of similar code running on 1.10: https://metrics.solana.com:3000/d/KCLhfAbMz/replay?orgId=1&var-datasource=InfluxDB_main-beta&var-testnet=mainnet-beta&var-hostid=DuSw81G5jUYQ4Z3ujYmoY2ULbqwKYBLnNdwPLw4S2wSM&from=now-15m&to=now

Problem

given the following txs that write lock accounts A-H:

tx1: AB

tx2: BC

tx3: CD

tx4: EF

tx5: FG

tx6: GH

the current algorithm will replay in the following order sequentially: [AB], [BC], [CD], [EF], [FG], [GH]. we should be able to execute these one at a time and determine if any transactions become unblocked.
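As an illustration of the example above, the following sketch builds a write-lock dependency graph for tx1 through tx6 and groups them into waves of transactions that have no outstanding conflicts. The function names and the wave-based grouping are assumptions made for illustration, accounts are modeled as `char`, and read locks are ignored; the PR's scheduler may differ in all of these respects.

```rust
use std::collections::{HashMap, HashSet};

/// For each transaction, collects the indices of earlier transactions that
/// write-lock at least one of the same accounts.
fn build_dependencies(txs: &[Vec<char>]) -> Vec<HashSet<usize>> {
    // account -> indices of transactions seen so far that write-lock it
    let mut writers: HashMap<char, Vec<usize>> = HashMap::new();
    let mut deps = Vec::with_capacity(txs.len());
    for (idx, accounts) in txs.iter().enumerate() {
        let mut tx_deps = HashSet::new();
        for account in accounts {
            let earlier = writers.entry(*account).or_default();
            // Skip idx itself in case an account is listed twice.
            tx_deps.extend(earlier.iter().copied().filter(|&i| i != idx));
            earlier.push(idx);
        }
        deps.push(tx_deps);
    }
    deps
}

/// Groups transactions into waves. Every transaction in a wave has all of
/// its dependencies in earlier waves, so a wave could run in parallel.
fn schedule_waves(mut deps: Vec<HashSet<usize>>) -> Vec<Vec<usize>> {
    let mut done = vec![false; deps.len()];
    let mut waves = Vec::new();
    while done.iter().any(|finished| !finished) {
        // Dependencies only point at earlier indices, so the graph is
        // acyclic and at least one transaction is always ready.
        let ready: Vec<usize> = (0..deps.len())
            .filter(|&i| !done[i] && deps[i].is_empty())
            .collect();
        for &i in &ready {
            done[i] = true;
        }
        // "Executing" the wave unblocks anything that depended on it.
        for tx_deps in deps.iter_mut() {
            for i in &ready {
                tx_deps.remove(i);
            }
        }
        waves.push(ready);
    }
    waves
}

fn main() {
    // tx1..tx6 from the example, as indices 0..5.
    let txs = vec![
        vec!['A', 'B'],
        vec!['B', 'C'],
        vec!['C', 'D'],
        vec!['E', 'F'],
        vec!['F', 'G'],
        vec!['G', 'H'],
    ];
    let waves = schedule_waves(build_dependencies(&txs));
    println!("{:?}", waves);
}
```

Under these assumptions the six transactions fall into three waves (tx1 and tx4, then tx2 and tx5, then tx3 and tx6) rather than six sequential steps.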

Summary of Changes

Proof

yellow is a leader setting a new bank in maybe_start_leader, red is the improved replay algorithm and the confirm_slot change, and blue is default. this is all on 1.10.

DuS running this modified code on 1.10

DuS consistently beating the unmodified validator by ~200ms on average